The world's best-known suite of office productivity tools, including Word, Excel, PowerPoint, Outlook, OneNote, Publisher and more.

Microsoft Office 2016 is a trial document-editing and productivity suite developed by Microsoft for Windows. It is fairly customizable and connects to OneDrive.

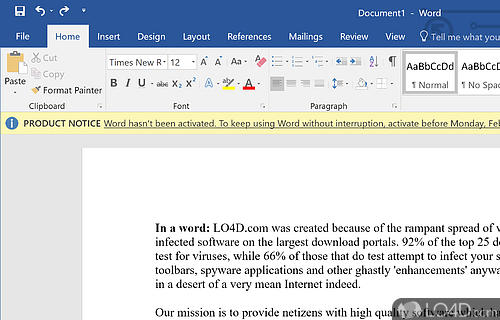

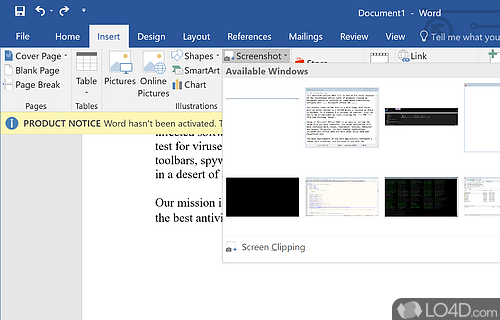

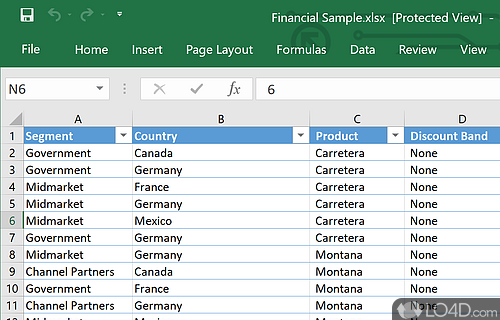

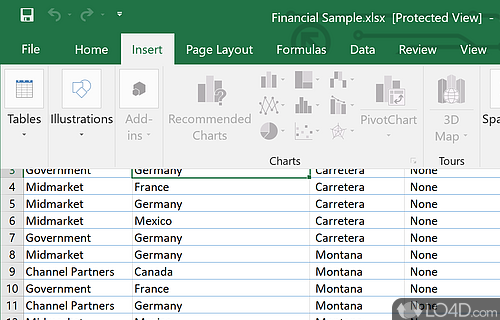

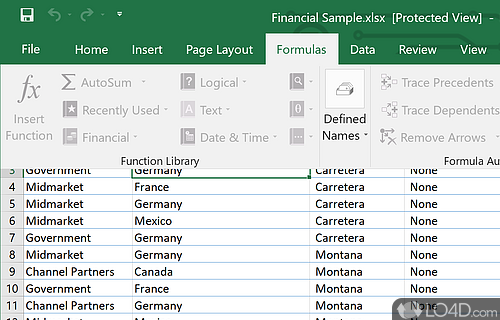

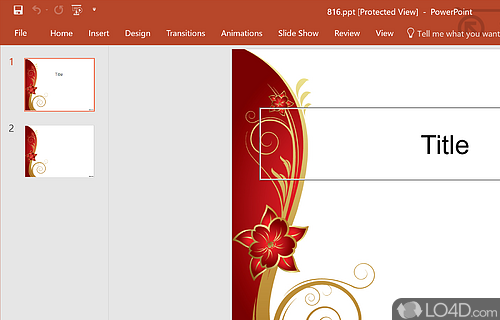

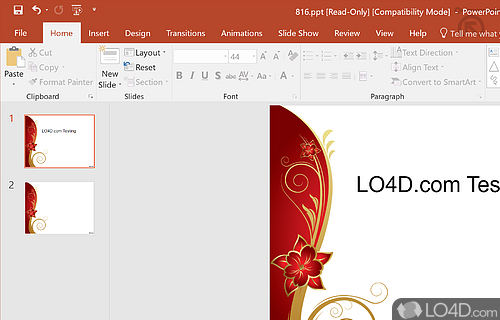

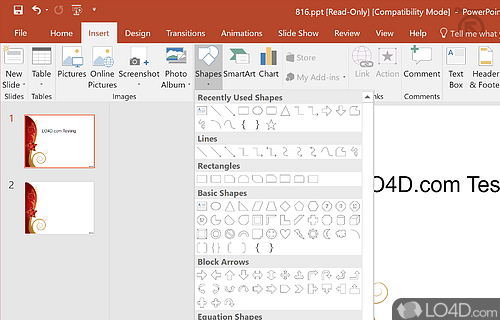

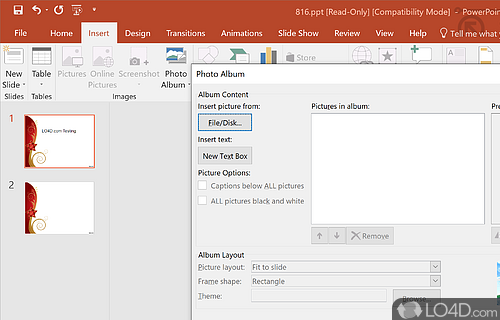

An editor here tested the download on a PC and compiled the list of features below. We've also taken screenshots of Microsoft Office 2016 to illustrate its user interface and show the overall usage and features of this document-editing program.

The well-known suite by Microsoft containing Word, Excel and PowerPoint

Microsoft Office is an office suite of desktop applications, servers and services for the Microsoft Windows and OS X operating systems. The first version of Office contained Microsoft Word, Microsoft Excel and Microsoft PowerPoint. Over the years, Office applications have grown substantially closer, with shared features such as a common spell checker.

Office 2013 for Windows, the predecessor of Office 2016, was released to manufacturing on October 11, 2012, and a 60-day trial version of Office 2013 Professional Plus was released for download.

Features of Microsoft Office 2016

- Advanced Outlook: Stay organized and access information quickly with improved email capabilities.

- Enhanced Security: Safeguard your documents with improved security measures.

- Excel Maps: Create interactive maps and visualize your data.

- Excel Power Query: Transform and analyze data faster with Power Query, built into Excel 2016 as Get & Transform.

- Anywhere Access: Easily open your files from anywhere with support for OneDrive and SharePoint.

- Intelligent Inking: Use your digital pen to annotate documents and create diagrams.

- Office Mobile Apps: Stay productive on the go with the Office 365 mobile applications.

- Outlook Groups: Streamline conversations and easily collaborate across teams.

- PowerPoint Designer: Generate visuals for your slides with the help of AI.

- Powerful Search: Quickly find the content you need with advanced search capabilities.

- Real-Time Collaboration: Work with colleagues in real time on shared documents.

- Smart Applications: Use AI-driven features to improve the way you work.

- Smart Lookup: Get relevant contextual information from the web.

- Themes: Customize the look and feel of your documents with modern themes.

Compatibility and License

This download is licensed as shareware for the Windows operating system, in the office software category, and can be used as a free trial until the trial period ends (after an unspecified number of days). The Microsoft Office 2016 2403.17425.20176 demo is available to all users as a free download, possibly with restrictions, and is not necessarily the full version of the software.

What version of Windows can Microsoft Office 2016 run on?

Microsoft Office 2016 runs on computers with Windows 11 or Windows 10. Earlier versions of the OS should also work; Windows 8 and Windows 7 have been tested. It is available as both 32-bit and 64-bit downloads.

Other operating systems: The latest version of Microsoft Office 2016, from 2024, is also available for Mac.
